Recovering Data from a Damaged Drive

I recently recovered data from a damaged external hard drive for a friend. I've done it before, but learned a few new things this time and wanted to share. These notes are applicable to physically damaged drives (bad sectors) rather than logical corruption. I presume some level of competency because I write these posts for my future self.

Side note: Portable HDDs were never a good idea

Portable HDDs were a necessary step in portable data storage evolution, and they're still around half the cost per-GB, but storing all your data eggs in one aluminium basket spinning at 7,200 RPM is not a great idea, at least not on its own.

The simplest way to keep your data safe is redundancy - just keep more than one copy of your data. At a minimum manually copy things you care about from one computer to another sometimes, or keep a copy on your computer and use an external drive only as a backup in case you accidentally delete something. Paying for some kind of cloud storage might be an even better idea if you can afford a subscription.

Use Linux

My friend's drive was damaged in a way that made Windows take one look at it and say "I'm not touching that." That's an extreme position to take. Linux on the other hand was happy to try.

Important! Only mount damaged filesystems read-only! The filesystem driver may choose to do strange things when it encounters errors. Your best chance for recovery requires leaving the bits just as they are.

If you are going to use Windows to do recovery I've had some success with EaseUS. It's not Free, but neither is Windows, and professional recovery is expensive (for good reasons).

ntfsclone

If the damage is not too extensive and the filesystem is in a state that will allow it, ntfsclone (part of NTFS-3G / ntfsprogs) will copy only the blocks that are still relevant to the filesystem. This will save you a ton of time compared to reading every block on the drive.

Unfortunately I tried other things first this time and got the filesystem into a state where ntfsclone refused to try. Or maybe the damage was too extensive. In any case it didn't work this time, but the last time I had to do this it was extremely helpful.

ddrescue

Depending on the size of the drive and how many damaged sectors there are this could take a long time - on the order of days or weeks - but it's worth it. GNU ddrescue reads data off the damaged drive, at first skipping the bad blocks, but later going back to retry them, try them in smaller chunks, try them forwards and backwards, etc., all the while keeping track of which blocks have been successfully copied.

# ddrescue --sparse --idirect /dev/sda recovery.{img,map}

GNU ddrescue 1.25

Press Ctrl-C to interrupt

Initial status (read from mapfile)

rescued: 1000 GB, tried: 60336 kB, bad-sector: 21972 kB, bad areas: 8656

Current status

ipos: 105688 MB, non-trimmed: 0 B, current rate: 0 B/s

opos: 105688 MB, non-scraped: 38351 kB, average rate: 0 B/s

non-tried: 0 B, bad-sector: 21985 kB, error rate: 0 B/s

rescued: 1000 GB, bad areas: 8656, run time: 2m 21s

pct rescued: 99.99%, read errors: 25, remaining time: n/a

time since last successful read: n/a

Scraping failed blocks... (forwards)

Size is a problem, but not as big a problem as you'd think. My friend's 1TB HDD had about 130GB of data on it, and using the --sparse option meant that the total size of the image file I copied their drive to was only 150GB (this includes leftover data from deleted files, and filesystem metadata, but doesn't include the damaged portions).

$ du --human-readable --apparent-size recovery.img

932G recovery.img

$ du --human-readable recovery.img

149G recovery.img

Speed is also a problem. The NTFS driver on Linux is extremely CPU hungry (or was on the Fedora 32 system I was using). I got the best performance by using an intermediate drive (instead of the replacement 1TB drive my friend also provided) using an EXT4 filesystem. Reading bad blocks also takes longer than reading good blocks because various layers between you and the tiny magnetic fields storing the data will retry. Using the --idirect option helped it register the errors quickly and move on, though it moved slower while reading good blocks.

losetup

Once you have an image of the drive that has a much data as you can sit around waiting for ddrescue to recover you'll need to mount it (again read-only) so that you can pull the files off it. You can do this using loopback devices (losetup).

# losetup --find --read-only --partscan --show recovery.img

/dev/loop0

# ls /dev/loop0*

/dev/loop0 /dev/loop0p1

# mount -o ro /dev/loop0p1 /mnt/

rsync

Now that you've got a mounted filesystem that won't block waiting for a damaged drive to try to read bad sectors, the only step remaining is to copy what you can to the new drive. rsync is the obvious choice. One important reason for that is that if your copy gets interrupted rsync can almost pick up where it left off (it will check metadata for changes before it gets going again). I keep it simple by using the --archive option.

Sidetracks

Here are some things I tried that I wish I hadn't, but were interesting in some way.

I was worried I wouldn't have enough space on my intermediate drive to store the full image file. One reason I worried about this is that it's possible for a drive to not be full of null bytes wherever there's empty space. null bytes can be stored as sparse blocks on modern filesystems but there's no guarantee that your drive isn't instead full of random data or that every unused bit is a 1 instead of a 0. It turns out that the HDD I was recovering was initialized to 0s, so I shouldn't have worried. It's possible the drive had a lot of files deleted from it so that the recovery would include a lot of old deleted data. None of this was the case, and none of it was likely. All of it lead me to try some dumb things. In the end I had enough space and I lost a lot of time.

Btrfs

Sometimes pronounced butterfs or even betterfs but apparently standing for "b-tree filesystem", Btrfs supports on-the-fly compression, so I thought maybe it would be a good idea to use that to avoid outgrowing my intermediate disk. It didn't help much (if at all) and it made everything take a lot longer.

QCOW2

The QEMU Copy-on-Write 2 disk image format also supports compression. QEMU includes the qemu-nbd utility that can be used to let you connect a QEMU-supported image to a running Linux system using the nbd (network block device) driver. This also made everything slow enough I didn't have the patience for it.

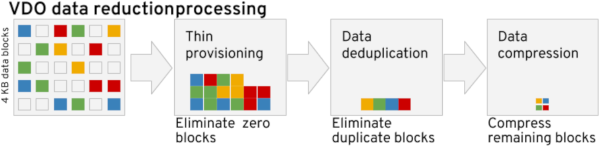

VDO

Not one to be daunted by other failed attempts I also tried a compression and deduplication system called Virtual Data Optimizer. This is an officially supported feature of Red Hat Enterprise Linux, so I thought "hey, this'll be great!" And it really is great in theory. I'll probably use it for something else some day. But again, way too slow to be a good idea.

Summary

If I had this to do over again, I'd avoid ever touching the damaged drive with something that might try to write to it, I'd copy the whole thing using ddrescue, and I'd depend on the Linux NTFS-3G drivers to just do their best at getting recovered files off the recovered volume. I'd avoid any false starts and just accept that it's going to take a very long time.

Afterword: TestDisk

If you're recovering deleted files, or deleted partitions, TestDisk is supposed to be able to do some great things for you. I haven't used it in a long time, but It's worth keeping in your recovery toolbox.